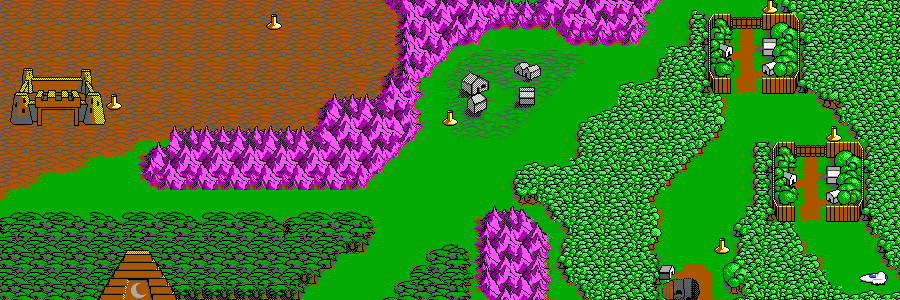

Commander Keen 4-6 file formats

20 Dec, 2025

Recently I made Dopefish Decoder, a Rust tool for dumping the graphics from a very old-school id Software game: Commander Keen 4-6. It was a bit of work (fun work though) combining information from various sources to figure out how to read it all, so here are the formats in rough EBNF! Further explanations of the more complex elements follow afterwards.

Files

- Graphics:

  - graph_head

  - graph_dict

  - egagraph

- Maps:

  - map_head

  - gamemaps

Non-file files

The map_head/graph_head/graph_dict “files” are actually present inside the game executable. Having said that, in many mods they are their own separate files. To get them, the executable first needs to be decompressed, then these offsets are used to extract them.

EBNF

// All multi-byte ints are little-endian.

graph_head = { graph offset }, graph length

graph length = 3 byte int // Matches length of egagraph file.

graph offset = 3 byte int

graph_dict = { huffman node }

huffman node = node side, node side // Left, right.

node side = node value, node type

node value = byte

node type = leaf | node // Byte: 0 = leaf, else = node.

egagraph = { chunk }

egagraph = unmasked picture table chunk with header,

masked picture table chunk with header,

sprite table chunk with header,

font a chunk with header,

font b chunk with header,

font c chunk with header,

{ unmasked picture chunk with header }, // Count from unmasked picture table.

{ masked picture chunk with header }, // Count from masked picture table.

{ sprite chunk with header }, // Count from sprite table.

unmasked 8x8 tiles chunk without header, // One chunk for all tiles.

masked 8x8 tiles chunk without header, // One chunk for all tiles.

{ unmasked 16x16 tile chunk without header },

{ masked 16x16 tile chunk without header },

{ text etc }

chunk without header = huffman encoded chunk

chunk with header = chunk decompressed length, huffman encoded chunk

chunk decompressed length = 4 byte int

picture table = { picture table entry }

picture table entry = width_pixels_divided_by_8, height_pixels

width_pixels_divided_by_8 = 2 byte int

height_pixels = 2 byte int

sprite table = { sprite table entry } // 18 bytes each.

sprite table entry = width_div_by_8, // All are 2 byte ints.

height,

x offset,

y offset,

clip left,

clip top,

clip right,

clip bottom,

shifts

image = picture | tile | sprite

unmasked image = red plane, green plane, blue plane, intensity plane

masked image = red plane, green plane, blue plane, intensity plane, mask plane

map_head = rlew key, { map header offset }

rlew key = 2 bytes

map header offset = 4 byte int // 0 means no map in this slot.

gamemaps = "TED5v1.0", { map }

map = map planes, map header

map header = background plane offset, // 38 bytes.

foreground plane offset,

sprite plane offset,

background plane length,

foreground plane length,

sprite plane length,

tile count width,

tile count height,

map name

plane offset = 4 byte int

plane length = 2 byte int

tile count = 2 byte int

map name = 16 bytes asciiz

map planes = background carmackized plane,

foreground carmackized plane,

sprite carmackized plane

carmackized plane = carmackized decompressed length, carmackized data

carmackized decompressed length = 2 byte int

carmackized data = carmack compressed(rlew plane)

rlew plane = rlew decompressed length, rlew data

rlew decompressed length = 2 byte int

rlew data = rlew compressed(decompressed plane)

decompressed plane = { map plane row }

map plane row = { map plane element }

map plane element = 2 byte int
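As a concrete reading of the graph_head and map header rules above, here is a minimal Rust sketch. The names (`le_uint`, `parse_graph_head`, `MapHeader`, `parse_map_header`) are made up for illustration and are not taken from Dopefish Decoder; the field order and the 38-byte size follow the EBNF, and the map name is assumed to be plain ASCII.

```rust
/// Reads a little-endian unsigned int from up to 4 bytes.
fn le_uint(bytes: &[u8]) -> u32 {
    bytes.iter().rev().fold(0, |acc, &b| (acc << 8) | b as u32)
}

/// graph_head: a run of 3-byte offsets, where the final 3-byte entry is the egagraph length.
/// Returns (offsets, egagraph length), or None if the input is empty or not a multiple of 3.
fn parse_graph_head(data: &[u8]) -> Option<(Vec<u32>, u32)> {
    if data.is_empty() || data.len() % 3 != 0 {
        return None;
    }
    let mut entries: Vec<u32> = data.chunks_exact(3).map(le_uint).collect();
    let length = entries.pop()?;
    Some((entries, length))
}

/// The 38-byte map header, in the field order given by the EBNF.
#[derive(Debug, PartialEq)]
struct MapHeader {
    plane_offsets: [u32; 3], // Background, foreground, sprite.
    plane_lengths: [u16; 3], // Same order as the offsets.
    width: u16,              // In tiles.
    height: u16,             // In tiles.
    name: String,
}

fn parse_map_header(h: &[u8]) -> Option<MapHeader> {
    if h.len() < 38 {
        return None;
    }
    let u16_at = |i: usize| u16::from_le_bytes([h[i], h[i + 1]]);
    let u32_at = |i: usize| u32::from_le_bytes([h[i], h[i + 1], h[i + 2], h[i + 3]]);
    // The name is 16 bytes, cut at the first zero byte if there is one.
    let name_bytes = &h[22..38];
    let end = name_bytes.iter().position(|&b| b == 0).unwrap_or(16);
    Some(MapHeader {
        plane_offsets: [u32_at(0), u32_at(4), u32_at(8)],
        plane_lengths: [u16_at(12), u16_at(14), u16_at(16)],
        width: u16_at(18),
        height: u16_at(20),
        name: String::from_utf8_lossy(&name_bytes[..end]).into_owned(),
    })
}
```

To use it, slice 38 bytes out of gamemaps at a non-zero offset from map_head and pass them to `parse_map_header`; the plane offsets it returns then point back into gamemaps.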

Image planes

- Images are stored in EGA planes.

- Data is one whole-image plane, then the next plane, and so on.

- Thus each pixel is spread across the data as 4 bits (unmasked) or 5 bits (masked), one bit per plane.

- Masked image planes: RGBIM.

- Red, Green, Blue, Intensity, Mask.

- Unmasked image planes: RGBI.

- When the mask bit = 1, it is a transparent pixel.

- Each pixel in a plane is represented by 1 bit.

- Inside each byte, pixels are left->right in big-endian order, 0x80 being leftmost.

- All widths are multiples of 8 so you don’t have to worry about rows starting mid-byte.
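The plane layout described in this list can be sketched in Rust roughly as follows. The function name `decode_planar` is hypothetical, the plane order is the one stated above, and packing the four bits into a single value as intensity, red, green, blue (the conventional EGA colour index) is my assumption rather than something this article specifies.

```rust
/// Converts planar image data into one entry per pixel, row by row.
/// `width_bytes` is the width in pixels divided by 8.
/// Each entry is None for a transparent pixel, otherwise a 4-bit colour value.
/// Returns None overall if `data` is too short for the given size.
fn decode_planar(
    data: &[u8],
    width_bytes: usize,
    height: usize,
    masked: bool,
) -> Option<Vec<Option<u8>>> {
    let plane_len = width_bytes * height;
    let plane_count = if masked { 5 } else { 4 };
    if data.len() < plane_len * plane_count {
        return None;
    }
    let mut pixels = Vec::with_capacity(plane_len * 8);
    for byte_index in 0..plane_len {
        for bit in 0..8 {
            let bit_mask = 0x80u8 >> bit; // 0x80 is the leftmost pixel.
            // Fetch this pixel's bit from plane number `p`.
            let plane_bit = |p: usize| ((data[p * plane_len + byte_index] & bit_mask) != 0) as u8;
            let (r, g, b, i) = (plane_bit(0), plane_bit(1), plane_bit(2), plane_bit(3));
            let transparent = masked && plane_bit(4) == 1;
            // Assumed packing: bit 3 = intensity, 2 = red, 1 = green, 0 = blue.
            let colour = (i << 3) | (r << 2) | (g << 1) | b;
            pixels.push(if transparent { None } else { Some(colour) });
        }
    }
    Some(pixels)
}
```

Because widths are whole bytes, walking the plane byte by byte already visits pixels in row order, so no per-row bookkeeping is needed.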

Map elements

- Map plane elements are ints representing which tile is displayed at that position.

- Background plane corresponds to the unmasked tiles.

- Foreground plane corresponds to the masked tiles.

- Foreground plane elements are not always present: 0 means no element here, so the first masked tile is represented by the value 1. Subtract 1 from the value to get the tile index.

- Background plane always has an element, so the above -1 does not apply.
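The lookup rules above are small enough to show as a Rust sketch. `Plane` and `tile_index` are illustrative names only, and the plane is assumed to be already decompressed into 2-byte elements stored row by row, as in the EBNF.

```rust
#[derive(Clone, Copy)]
enum Plane {
    Background,
    Foreground,
}

/// Returns the tile index at tile position (x, y), or None if the position is
/// outside the plane or (foreground only) there is no tile there.
fn tile_index(plane: &[u16], width: usize, x: usize, y: usize, kind: Plane) -> Option<usize> {
    if x >= width {
        return None;
    }
    let element = *plane.get(y * width + x)? as usize;
    match kind {
        // Background elements are used as-is: index into the unmasked tiles.
        Plane::Background => Some(element),
        // Foreground: 0 means empty, otherwise subtract 1 to index the masked tiles.
        Plane::Foreground => element.checked_sub(1),
    }
}
```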

RLEW / Huffman / Carmackization

These compression techniques are big topics, far too complex for EBNF, and out of scope for an article like this.

They are probably best described in code, which also has links to further reading. Hopefully the following code is readable enough to communicate the how-to:
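As a rough illustration (this is not the Dopefish Decoder source), the three decoders can be sketched in Rust as below. All function names are hypothetical. Several details come from the commonly documented id Software formats rather than from this article, so treat them as assumptions: Huffman decoding starts at node 254 and reads bits least-significant first, the Carmack tags are 0xA7 (near) and 0xA8 (far), and the length fields count bytes. The code indexes directly and will panic on truncated or corrupt input.

```rust
/// Huffman: `dict` is graph_dict, 4 bytes per node (left value, left type, right value, right type).
fn huffman_decode(dict: &[u8], data: &[u8], out_len: usize) -> Vec<u8> {
    const ROOT: usize = 254; // Assumption: the tree's root is node 254.
    let mut out = Vec::with_capacity(out_len);
    let mut node = ROOT;
    // Assumption: bits are consumed from the least significant end of each byte.
    let bits = data.iter().flat_map(|&byte| (0..8).map(move |bit| (byte >> bit) & 1));
    for bit in bits {
        if out.len() >= out_len {
            break;
        }
        let side = node * 4 + bit as usize * 2; // Bit 0 = left side, bit 1 = right side.
        let (value, node_type) = (dict[side], dict[side + 1]);
        if node_type == 0 {
            out.push(value); // Leaf: emit the byte and restart from the root.
            node = ROOT;
        } else {
            node = value as usize; // Node: the value is the next node's index.
        }
    }
    out
}

/// RLEW: a word equal to `key` is followed by a repeat count, then the word to repeat.
fn rlew_decode(words: &[u16], key: u16) -> Vec<u16> {
    let mut out = Vec::new();
    let mut i = 0;
    while i < words.len() {
        if words[i] == key && i + 2 < words.len() {
            out.extend(std::iter::repeat(words[i + 2]).take(words[i + 1] as usize));
            i += 3;
        } else {
            out.push(words[i]);
            i += 1;
        }
    }
    out
}

/// Carmack: words whose high byte is a tag copy `count` earlier output words.
fn carmack_decode(data: &[u8], out_words: usize) -> Vec<u16> {
    let mut out: Vec<u16> = Vec::with_capacity(out_words);
    let mut i = 0;
    while out.len() < out_words && i + 1 < data.len() {
        let (count, tag) = (data[i] as usize, data[i + 1]);
        i += 2;
        if tag != 0xA7 && tag != 0xA8 {
            out.push(u16::from_le_bytes([count as u8, tag])); // Plain literal word.
        } else if count == 0 {
            // Escape: a literal word whose high byte happens to equal a tag.
            out.push(u16::from_le_bytes([data[i], tag]));
            i += 1;
        } else {
            let start = if tag == 0xA7 {
                i += 1;
                out.len() - data[i - 1] as usize // Near: words back from the current end.
            } else {
                i += 2;
                u16::from_le_bytes([data[i - 2], data[i - 1]]) as usize // Far: words from the start.
            };
            for k in 0..count {
                let word = out[start + k]; // Copy one at a time: source and target may overlap.
                out.push(word);
            }
        }
    }
    out
}

/// Decodes one "carmackized plane" from gamemaps, using the rlew key from map_head.
fn decode_plane(plane: &[u8], rlew_key: u16) -> Vec<u16> {
    let carmack_len = u16::from_le_bytes([plane[0], plane[1]]) as usize; // Assumed to be in bytes.
    let rlew_plane = carmack_decode(&plane[2..], carmack_len / 2);
    let rlew_len = rlew_plane[0] as usize; // Assumed to be in bytes.
    let mut out = rlew_decode(&rlew_plane[1..], rlew_key);
    out.truncate(rlew_len / 2);
    out
}
```

`decode_plane` mirrors the nesting in the EBNF: strip the Carmack length, undo Carmack, strip the RLEW length, then undo RLEW. For a map of width × height tiles, the result should hold width × height elements.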

Summary

I know this is the most random topic imaginable. Still, thanks for reading! I pinky promise this was written by a human, not AI. I hope you found this fascinating, if not useful, at least a tiny bit. God bless!

Chris Hulbert

(Comp Sci, Hons - UTS)
